Draw Like Bob Ross (With PyTorch!)

I've been playing around with autoencoders and have been fascinated by the idea of using one in a cool, fun project, and so drawlikebobross was born.

What is drawlikebobross?

drawlikebobross aims to turn a patched color photo into a Bob Ross-styled image, like so:

Basically, it turns rough color patches into an image that (hopefully) looks like it could have been painted by Bob Ross.

It also includes a nice little web app for you to test things out :-)

How we do what we do

Scraping Data

Before we dive into anything, we first need data. Fortunately, a quick Google search for “Bob Ross Datasets” turns up this website: twoinchbrush.

What's so great about this website is that it lists all of its Bob Ross images in a nice, scrapable format:

https://www.twoinchbrush.com/images/painting1.png

https://www.twoinchbrush.com/images/painting2.png

https://www.twoinchbrush.com/images/painting3.png

https://www.twoinchbrush.com/images/paintingN.png

A quick and easy shell script finishes the job.
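The shell script itself isn't shown, so here is a rough Python equivalent (kept in Python to match the rest of the code in this post). It assumes the `paintingN.png` URL pattern above holds for every index from 1 to N; the total count N isn't given in the post, so you pass it in. The function names are hypothetical.

```python
import os
import urllib.request

# URL pattern from the listing above; N (the number of paintings) must be supplied.
BASE_URL = "https://www.twoinchbrush.com/images/painting{}.png"


def painting_urls(n):
    """Return the URLs for painting1.png .. paintingN.png."""
    return [BASE_URL.format(i) for i in range(1, n + 1)]


def download_paintings(n, out_dir="paintings"):
    """Download each painting into out_dir, skipping files already on disk."""
    os.makedirs(out_dir, exist_ok=True)
    for url in painting_urls(n):
        path = os.path.join(out_dir, os.path.basename(url))
        if os.path.exists(path):
            continue  # makes the script safe to re-run after an interruption
        try:
            urllib.request.urlretrieve(url, path)
        except OSError as err:  # urllib.error.URLError is a subclass of OSError
            print(f"skipping {url}: {err}")
```

Skipping existing files means a flaky connection only costs you a re-run, not a full re-download.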

Preprocessing Data

Since our challenge is to convert color patches into Bob Ross-styled paintings, I decided to use mean shift filtering to smooth each image until it resembles a set of color patches. The smoothed image becomes the input, and the original painting is the target output:
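The post doesn't say which mean shift implementation was used; OpenCV's `cv2.pyrMeanShiftFiltering(src, sp, sr)` is a common choice. To show what the filter actually does, here is a deliberately naive NumPy sketch: each pixel repeatedly moves to the mean position and mean color of its neighbours that are both spatially close and close in color, then takes the color it converges to. The radius and iteration defaults are assumptions, and it is far too slow for full-size paintings.

```python
import numpy as np


def mean_shift_filter(img, spatial_radius=3, color_radius=30.0, max_iter=5, eps=1.0):
    """Naive mean shift filtering of an HxWx3 image; returns a float64 array.

    Neighbours must lie inside a square window of `spatial_radius` pixels AND
    within `color_radius` (Euclidean distance in RGB) of the current color.
    """
    src = np.asarray(img, dtype=np.float64)
    h, w, _ = src.shape
    out = np.empty_like(src)
    for y in range(h):
        for x in range(w):
            cy, cx, color = float(y), float(x), src[y, x].copy()
            for _ in range(max_iter):
                iy, ix = int(round(cy)), int(round(cx))
                y0, y1 = max(iy - spatial_radius, 0), min(iy + spatial_radius + 1, h)
                x0, x1 = max(ix - spatial_radius, 0), min(ix + spatial_radius + 1, w)
                window = src[y0:y1, x0:x1].reshape(-1, 3)
                ys, xs = np.mgrid[y0:y1, x0:x1]
                # Keep only neighbours whose color is within color_radius
                close = np.sum((window - color) ** 2, axis=1) <= color_radius ** 2
                if not close.any():
                    break
                new_color = window[close].mean(axis=0)
                # Shift the spatial center to the mean position of those neighbours
                cy, cx = ys.ravel()[close].mean(), xs.ravel()[close].mean()
                shift = np.abs(new_color - color).max()
                color = new_color
                if shift < eps:  # converged
                    break
            out[y, x] = color
    return out
```

Because neighbours with very different colors are ignored, edges between regions survive while the noise inside each region is flattened, which is exactly the "color patch" look we want for the inputs.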

To minimize training time, I preprocessed the bulk of the images into smoothed images ahead of time and stored them in HDF5 (.h5) format. This lets me rapidly test different neural network architectures without having to preprocess the data during training, which is a huge time saver.
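A minimal sketch of that preprocessing output, assuming `h5py` and two aligned uint8 datasets named `inputs` (smoothed) and `targets` (original), both of shape N x H x W x 3. The dataset names and helper functions are hypothetical; the post only says the data was stored as h5.

```python
import h5py
import numpy as np


def write_pairs_h5(path, inputs, targets):
    """Store smoothed inputs and original paintings as two aligned uint8 datasets."""
    inputs = np.asarray(inputs, dtype=np.uint8)
    targets = np.asarray(targets, dtype=np.uint8)
    if inputs.shape != targets.shape:
        raise ValueError("every smoothed input needs a matching original painting")
    # One chunk per image, so a loader can read a single sample cheaply
    chunks = (1,) + inputs.shape[1:]
    with h5py.File(path, "w") as f:
        f.create_dataset("inputs", data=inputs, chunks=chunks, compression="gzip")
        f.create_dataset("targets", data=targets, chunks=chunks, compression="gzip")


def read_pair(path, index):
    """Read one (input, target) pair back as NumPy arrays."""
    with h5py.File(path, "r") as f:
        return f["inputs"][index], f["targets"][index]
```

Per-image chunking is the design choice that matters here: training reads random samples, and this layout avoids decompressing the whole dataset to fetch one.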

Neural Network Architecture

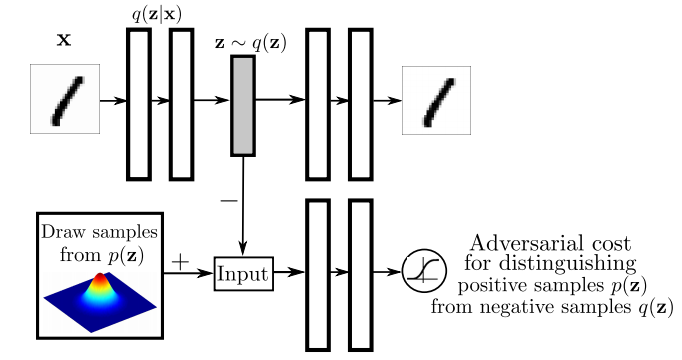

The network architecture I'm using is called an Adversarial Autoencoder, or AAE for short. You can read more about them here, and the original paper is here.

TL;DR: “The idea I find most fascinating in this paper is the concept of mapping the encoder’s output distribution q(z|x) to an arbitrary prior distribution p(z) using adversarial training (rather than variational inference).” - Hendrik J. Weideman

AAE Figure
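The post doesn't show models.py or trainer.py, so here is a minimal PyTorch sketch of the AAE idea, not the author's implementation. It assumes small fully connected layers on flattened 32x32 RGB images scaled to [0, 1], a standard normal prior p(z), and an MSE reconstruction loss; `AAE`, `make_optimizers`, and `train_step` are hypothetical names. Each step follows the two phases from the AAE paper: a reconstruction phase (smoothed patches in, original painting out), then a regularization phase where a discriminator learns to tell prior samples from encoder codes and the encoder is updated to fool it.

```python
import torch
import torch.nn.functional as F
from torch import nn


class AAE(nn.Module):
    def __init__(self, img_dim=3 * 32 * 32, z_dim=8, hidden=256):
        super().__init__()
        # q(z|x): image -> latent code
        self.encoder = nn.Sequential(nn.Linear(img_dim, hidden), nn.ReLU(), nn.Linear(hidden, z_dim))
        # p(x|z): latent code -> image, pixels squashed into [0, 1]
        self.decoder = nn.Sequential(
            nn.Linear(z_dim, hidden), nn.ReLU(), nn.Linear(hidden, img_dim), nn.Sigmoid()
        )
        # Outputs a logit for "this z was drawn from the prior"
        self.discriminator = nn.Sequential(nn.Linear(z_dim, 64), nn.ReLU(), nn.Linear(64, 1))

    def forward(self, x):
        return self.decoder(self.encoder(x))


def make_optimizers(model, lr_ae=1e-3, lr_adv=1e-4):
    ae_params = list(model.encoder.parameters()) + list(model.decoder.parameters())
    opt_ae = torch.optim.Adam(ae_params, lr=lr_ae)                        # reconstruction
    opt_d = torch.optim.Adam(model.discriminator.parameters(), lr=lr_adv)  # discriminator
    opt_g = torch.optim.Adam(model.encoder.parameters(), lr=lr_adv)        # encoder as generator
    return opt_ae, opt_d, opt_g


def train_step(model, opt_ae, opt_d, opt_g, patches, paintings):
    """One AAE update on flattened (batch, img_dim) tensors in [0, 1]."""
    bce = nn.BCEWithLogitsLoss()

    # 1) Reconstruction phase: smoothed patches in, original painting out
    opt_ae.zero_grad()
    recon_loss = F.mse_loss(model(patches), paintings)
    recon_loss.backward()
    opt_ae.step()

    # 2a) Regularization, discriminator: prior samples are "real", encoder codes "fake"
    z_fake = model.encoder(patches).detach()
    z_real = torch.randn_like(z_fake)  # assumed prior p(z) = N(0, I)
    ones = torch.ones(z_fake.size(0), 1)
    zeros = torch.zeros(z_fake.size(0), 1)
    opt_d.zero_grad()
    d_loss = bce(model.discriminator(z_real), ones) + bce(model.discriminator(z_fake), zeros)
    d_loss.backward()
    opt_d.step()

    # 2b) Regularization, generator: encoder tries to make its codes look like prior samples
    opt_g.zero_grad()
    g_loss = bce(model.discriminator(model.encoder(patches)), ones)
    g_loss.backward()
    opt_g.step()

    return recon_loss.item(), d_loss.item(), g_loss.item()
```

The encoder is pulled in two directions: the reconstruction loss wants codes that preserve the painting, while the adversarial loss pushes the distribution of codes toward the prior. The discriminator only ever sees latent codes, never images. A real image model would more likely use convolutional layers in the encoder and decoder.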

Feeding Data Into Our Model + Pytorch

So we want color patches coming in and Bob Ross-styled images coming out; the process should now look like this:

I chose PyTorch to implement the model because I've been using it tons at work: it has a super pleasant and consistent API (looking at you, TensorFlow), and I feel like my productivity has increased tenfold.

The model pipeline has also been abstracted into 4 components:

- models.py: Architecture of the Neural Network

- loader.py: Dataloader

- trainer.py: The training procedure for the Network

- train.py: Run this file to initiate training

This way, if I want to, say, change the architecture of the network, all I need to do is edit models.py and trainer.py, and we're good to go!
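As an example of how small one of these pieces can be, here is a hedged sketch of what loader.py might contain. It assumes the preprocessed HDF5 file holds two aligned uint8 datasets named `inputs` and `targets` (hypothetical names) of shape N x H x W x 3; `PatchPaintingDataset` is likewise a made-up name.

```python
import h5py
import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset


class PatchPaintingDataset(Dataset):
    """Yields (smoothed_patch, original_painting) float tensors in [0, 1], CHW layout."""

    def __init__(self, h5_path):
        self.h5_path = h5_path
        self._file = None  # opened lazily so each DataLoader worker gets its own handle
        with h5py.File(h5_path, "r") as f:
            self._len = len(f["inputs"])

    def __len__(self):
        return self._len

    @staticmethod
    def _to_tensor(arr):
        # HxWx3 uint8 -> 3xHxW float in [0, 1]
        return torch.from_numpy(np.ascontiguousarray(arr)).permute(2, 0, 1).float() / 255.0

    def __getitem__(self, index):
        if self._file is None:
            self._file = h5py.File(self.h5_path, "r")
        x = self._file["inputs"][index]
        y = self._file["targets"][index]
        return self._to_tensor(x), self._to_tensor(y)
```

Usage would be along the lines of `DataLoader(PatchPaintingDataset("pairs.h5"), batch_size=32, shuffle=True)`. Since trainer.py only sees (input, target) batches, swapping the architecture in models.py leaves this file untouched.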

Training

Now the longer we train, the better the network gets at Bob Rossifying our color patches:

Since I'm using a ThinkPad T460s with a shitty GPU, I rented a g2 instance on AWS and chucked the model there for about a day (it got to around 2.5k epochs).

Final thoughts

I had a ton of fun making this lil project. If machine learning interests you, I highly recommend doing a small weekend project like this one.

Also, did I mention that the project has a web UI to interact with the model? ;-)